Managing Cost and Usage Reports (Data Exports) in AWS

Learn how to set up and query your AWS Cost and Usage Reports (Data Exports)

In today’s post, I am going to show you how to enable Cost and Usage reports for your AWS account/organization and how to query them to get valuable insights.

We are going to enable CUR 2.0 and set up an Infrastructure via Terraform to do the following:

Set up Amazon Athena

Create a Glue Crawler to catalog our data

Create a Lambda to send us monthly reports based on some SQL queries

This is a Level 200 post, following along with the post and deploying the infrastructure to your AWS account will cost approximately ~$1 per month (not including the CUR reports in S3)

If you haven’t seen my previous post about Amazon Athena, I would recommend doing so, since I will not dive deep into that section.

What is CUR, and what is CUR 2.0

CUR is AWS's Cost and Usage Reports, and this name is now deprecated. The official name of the service is Data Exports. With Data Exports, you can extract AWS accounts’/orgs’ data to S3 in a readable and queryable format. There are many flavors of exports as mentioned below:

Cost and Usage Report 2.0 (CUR 2.0)

Enhanced, detailed cost and usage data. Recommended over legacy CUR.Cost Optimization Recommendations

Data from the Cost Optimization Hub to identify savings opportunities.FOCUS 1.0 with AWS Columns

Structured cost data export aligned with the FOCUS standard.Carbon Emissions

Track AWS carbon footprint data for sustainability reporting.Cost and Usage Dashboard (QuickSight)

Pre-built dashboard export for cost visualization in Amazon QuickSight.Legacy Cost and Usage Report (Legacy CUR)

Older CUR format, supported with different API actions.

We will focus today on the CUR 2.0 reports, but after this post, you can enable whichever data export, follow the same process, use the same infrastructure, and query your data as you did with CUR 2.0.

Cost and Usage Reports 2.0 provides the following improvements over Cost and Usage Reports (Legacy):

Consistent schema: CUR 2.0 contains a fixed set of columns, whereas the columns included for CUR can vary monthly depending on your usage of AWS services, cost categories, and resource tags.

Nested data: CUR 2.0 reduces data sparsity by collapsing certain columns from CUR into individual columns with key-value pairs of the collapsed columns. The nested keys can optionally be queried in Data Exports as separate columns to match the original CUR schema and data.

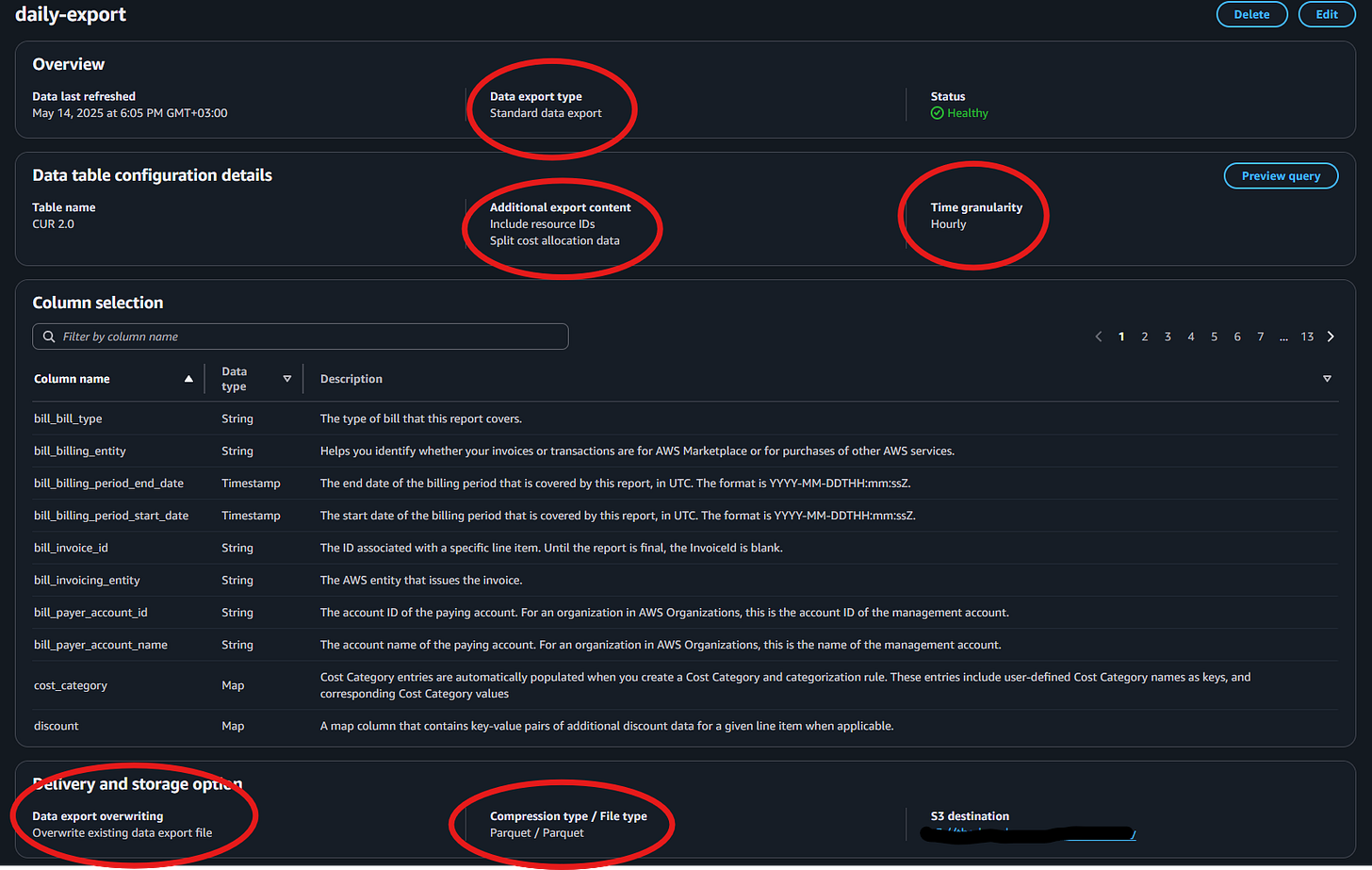

Enabling CUR 2.0 ~ Data Exports

Now let’s enable our CUR 2.0 in our AWS account. You can follow this guide from AWS, which shows how to do that via the console, but you can always use the CLI.

First, we need to create a bucket, make sure you put a proper bucket name here:

aws s3 mb s3://my-cur-bucket-example --region eu-central-1Then we need to attach a bucket policy so that Data Exports can write to the bucket. You can save the following to a .json file, let’s call it bucket-policy.json

{

"Version": "2012-10-17",

"Statement": [

{

"Sid": "EnableAWSDataExportsToWriteToS3AndCheckPolicy",

"Effect": "Allow",

"Principal": {

"Service": [

"billingreports.amazonaws.com",

"bcm-data-exports.amazonaws.com"

]

},

"Action": [

"s3:PutObject",

"s3:GetBucketPolicy"

],

"Resource": [

"arn:aws:s3:::${bucket_name}/*",

"arn:aws:s3:::${bucket_name}"

],

"Condition": {

"StringLike": {

"aws:SourceAccount": "${accountId}",

"aws:SourceArn": [

"arn:aws:cur:us-east-1:${accountId}:definition/*",

"arn:aws:bcm-data-exports:us-east-1:${accountId}:export/*"

]

}

}

}

]

}Replace ${bucket_name} with the name you used above and ${accountId} with your AWS account ID.

Then we want to attach this policy to our newly created bucket. Make sure you put the correct bucket name in the command.

aws s3api put-bucket-policy --bucket my-cur-bucket-example --policy file://bucket-policy.jsonAfter we have our bucket, we can set up the data export. Follow this guide. At this point, you should have a CUR 2.0 data export, and within 24 hours, it will start generating data.

Disclaimer: There is no backfill option, meaning you will start seeing data from the day you enabled the data export.

Setting the stage - Terraform

As we can see, we will create the following resources. The Terraform files and sample queries can be found in the GitHub repo.

2 S3 buckets: One for Athena to save the query results and one for Athena data in case we want to CTAS. The third bucket is the one we created in the previous section.

A Glue Crawler to catalog the CUR 2.0 data to Glue

Amazon Athena workgroup and database for the CUR table

And a Lambda that will be triggered monthly and send us an email report with the untagged resources. The Lambda is dockerized.

You can configure the infrastructure by editing the locals.tf file.

Update

aws_regionto your preferred regionSet

nameto your project's nameSet

cur_bucket_nameto your CUR bucket nameSet

cur_prefixto your CUR data prefixMake sure

table_namecur_{data},datais the folder Glue is lookingSet

sender_emailandrecipient_emailsfor the Lambda to send the cur reportSet the

tag_key_to_analyzeto the tag you want to make sure you are searching for

I will skip the creation of the S3 Buckets, AWS Glue Crawler, and Amazon Athena, since it is the same as my previous post. Feel free to follow the guide there.

Let’s first add an identity to SES to send our email reports.

resource "aws_ses_email_identity" "sender" {

email = local.sender_email

}We will need to verify our identity in order to send emails.

Then we will create the Lambda function and the Docker image. We use Anton’s Terraform modules to make our lives easier.

# ECR Docker image for Lambda

module "docker_image" {

source = "terraform-aws-modules/lambda/aws//modules/docker-build"

ecr_repo = module.ecr.repository_name

source_path = "${path.module}/lambdas"

use_image_tag = true

}

module "ecr" {

source = "terraform-aws-modules/ecr/aws"

repository_name = local.ecr_repository_name

repository_force_delete = true

create_lifecycle_policy = false

repository_lambda_read_access_arns = [module.lambda_function.lambda_function_arn]

}

# Lambda function using terraform-aws-modules/lambda/aws module

module "lambda_function" {

source = "terraform-aws-modules/lambda/aws"

version = "~> 7.20"

function_name = local.lambda_name

description = local.lambda_description

# Docker image config

create_package = false

image_uri = module.docker_image.image_uri

package_type = "Image"

# Lambda settings

timeout = 300

memory_size = 512

# Environment variables

environment_variables = {

DATABASE_NAME = aws_athena_database.athena_database.name

TABLE_NAME = local.table_name

WORKGROUP = aws_athena_workgroup.athena_workgroup.name

OUTPUT_BUCKET = module.athena_results_bucket.s3_bucket_id

SENDER_EMAIL = local.sender_email

RECIPIENT_EMAILS = local.recipient_emails

TAG_KEY_TO_ANALYZE = local.tag_key_to_analyze

}

# IAM policy statements

attach_policy_statements = true

policy_statements = {

cloudwatch_logs = {

effect = "Allow",

actions = [

"logs:CreateLogGroup",

"logs:CreateLogStream",

"logs:PutLogEvents"

],

resources = ["arn:aws:logs:*:*:*"]

},

athena = {

effect = "Allow",

actions = [

"athena:StartQueryExecution",

"athena:GetQueryExecution",

"athena:GetQueryResults"

],

resources = ["*"]

},

glue = {

effect = "Allow",

actions = [

"glue:GetDatabase",

"glue:GetDatabases",

"glue:GetTable",

"glue:GetTables",

"glue:GetPartition",

"glue:GetPartitions",

"glue:BatchGetPartition"

],

resources = ["*"]

},

s3 = {

effect = "Allow",

actions = [

"s3:GetObject",

"s3:ListBucket",

"s3:GetBucketLocation",

"s3:PutObject"

],

resources = [

"arn:aws:s3:::${module.athena_results_bucket.s3_bucket_id}",

"arn:aws:s3:::${module.athena_results_bucket.s3_bucket_id}/*"

]

},

s3_cur = {

effect = "Allow",

actions = [

"s3:GetObject",

"s3:ListBucket",

"s3:GetBucketLocation"

],

resources = [

"arn:aws:s3:::${local.cur_bucket_name}",

"arn:aws:s3:::${local.cur_bucket_name}/*"

]

},

ses = {

effect = "Allow",

actions = [

"ses:SendEmail",

"ses:SendRawEmail"

],

resources = ["*"]

}

}

tags = local.tags

}In the code above, we first set up the Docker build and push it to ECR. Then we create the Lambda function alongside all the permissions it needs to execute its job.

Last but not least, we introduce the scheduling part of our architecture by utilizing a CloudWatch event rule.

# CloudWatch Event Rule to trigger Lambda on a schedule (monthly)

resource "aws_cloudwatch_event_rule" "monthly_trigger" {

name = "lambda-monthly-trigger"

description = "Triggers the untagged resources reporter Lambda function on the 3rd day of each month"

schedule_expression = "cron(0 8 3 * ? *)" # 8:00 AM UTC on the 3rd day of each month

tags = local.tags

}

# CloudWatch Event Target

resource "aws_cloudwatch_event_target" "lambda_target" {

rule = aws_cloudwatch_event_rule.monthly_trigger.name

target_id = "TriggerLambda"

arn = module.lambda_function.lambda_function_arn

}

# Lambda permission to allow CloudWatch Events to invoke the function

resource "aws_lambda_permission" "allow_cloudwatch" {

statement_id = "AllowExecutionFromCloudWatch"

action = "lambda:InvokeFunction"

function_name = module.lambda_function.lambda_function_name

principal = "events.amazonaws.com"

source_arn = aws_cloudwatch_event_rule.monthly_trigger.arn

}In the code above, we create a CloudWatch event rule triggered at 8:00 AM UTC every 3rd day of the month. 3rd day because we want to make sure all the data points from CUR are available from the previous month, usually it takes 24 hours. Then, we create the target and the permissions to CloudWatch to trigger the Lambda function.

And that’s it. You are now ready to deploy the infrastructure.

terraform planand once we validate the plan, we can run

terraform applyIf we are impatient and want to see the work we’ve done, we can trigger the Lambda function with a test and see the results.

As you can see, I vibe-coded the sh*t out of it for the report visuals 😅😅😅Who likes to write HTML in python for emails? Let me know in the comments below!

You can find the Lambda code inside the lambdas folder.

You can modify the Lambda function to do whatever you like, you now have all of the CUR data at your fingertips 🤤

Useful queries

In this section, I will showcase some Athena queries on the CUR 2.0 data that I found helpful. The complete collection of the CUR queries can be found here.

You will notice these lines in some queries I show you. Pretty much this excludes the credits to show some data 😅 If you want the credits to be applied in your queries, remove those lines.

line_item_line_item_type != 'Credit' AND

line_item_line_item_type != 'Refund'S3 Costs by bucket and usage type

SELECT

line_item_resource_id AS bucket_name,

line_item_usage_type AS usage_type,

SUM(line_item_unblended_cost) AS cost

FROM cur_data

WHERE

line_item_product_code = 'AmazonS3' AND

line_item_resource_id <> '' AND

bill_billing_period_start_date = DATE '2025-05-01' AND

line_item_line_item_type != 'Credit' AND

line_item_line_item_type != 'Refund'

GROUP BY 1, 2

ORDER BY 3 DESC

LIMIT 20;Saving from Reserved Instances

SELECT

bill_billing_period_start_date AS billing_period,

reservation_reservation_a_r_n AS reservation_arn,

SUM(reservation_effective_cost) AS effective_cost,

SUM(reservation_unused_amortized_upfront_fee_for_billing_period) AS unused_upfront_fee,

SUM(reservation_unused_recurring_fee) AS unused_recurring_fee

FROM cur_data

WHERE

reservation_reservation_a_r_n <> '' AND

bill_billing_period_start_date = DATE '2025-05-01'

GROUP BY 1, 2

ORDER BY 3 DESC;Identify untagged resources

SELECT

line_item_product_code AS service,

line_item_resource_id AS resource_id,

product_region_code AS region,

SUM(line_item_unblended_cost) AS cost

FROM cur_data

WHERE

CARDINALITY(MAP_KEYS(resource_tags)) = 0 AND

line_item_resource_id <> '' AND

line_item_line_item_type = 'Usage' AND

bill_billing_period_start_date = DATE '2025-05-01'

GROUP BY 1, 2, 3

HAVING SUM(line_item_unblended_cost) > 0

ORDER BY 4 DESC

LIMIT 50;Cost trend current vs previous month

SELECT

'2025-05' AS current_month,

'2025-04' AS previous_month,

SUM(CASE WHEN bill_billing_period_start_date = DATE '2025-05-01' AND line_item_line_item_type NOT IN ('Credit', 'Refund') THEN line_item_unblended_cost ELSE 0 END) AS may_cost,

SUM(CASE WHEN bill_billing_period_start_date = DATE '2025-04-01' AND line_item_line_item_type NOT IN ('Credit', 'Refund') THEN line_item_unblended_cost ELSE 0 END) AS april_cost,

SUM(CASE WHEN bill_billing_period_start_date = DATE '2025-05-01' AND line_item_line_item_type NOT IN ('Credit', 'Refund') THEN line_item_unblended_cost ELSE 0 END) -

SUM(CASE WHEN bill_billing_period_start_date = DATE '2025-04-01' AND line_item_line_item_type NOT IN ('Credit', 'Refund') THEN line_item_unblended_cost ELSE 0 END) AS cost_difference

FROM cur_data

WHERE

bill_billing_period_start_date IN (DATE '2025-05-01', DATE '2025-04-01');Identify resources with the highest cost increase

Weekly analysis for the last 2 weeks

WITH current_week_costs AS (

SELECT

line_item_resource_id AS resource_id,

SUM(line_item_unblended_cost) AS cost

FROM cur_data

WHERE

line_item_usage_start_date BETWEEN DATE('2025-05-12') AND DATE('2025-05-18') AND

line_item_resource_id <> ''

GROUP BY 1

),

prev_week_costs AS (

SELECT

line_item_resource_id AS resource_id,

SUM(line_item_unblended_cost) AS cost

FROM cur_data

WHERE

line_item_usage_start_date BETWEEN DATE('2025-05-05') AND DATE('2025-05-11') AND

line_item_resource_id <> ''

GROUP BY 1

),

resource_details AS (

SELECT DISTINCT

line_item_resource_id AS resource_id,

line_item_product_code AS service,

product_region_code AS region

FROM cur_data

WHERE

line_item_resource_id <> '' AND

line_item_usage_start_date BETWEEN DATE('2025-05-05') AND DATE('2025-05-18')

)

SELECT

r.resource_id,

r.service,

r.region,

-- Week costs

COALESCE(p.cost, 0) AS prev_week_cost,

COALESCE(c.cost, 0) AS current_week_cost,

COALESCE(c.cost, 0) - COALESCE(p.cost, 0) AS week_over_week_change,

-- Percentage change

CASE

WHEN COALESCE(p.cost, 0) > 0

THEN ROUND(((COALESCE(c.cost, 0) - COALESCE(p.cost, 0)) / COALESCE(p.cost, 0)) * 100, 2)

WHEN COALESCE(p.cost, 0) = 0 AND COALESCE(c.cost, 0) > 0

THEN NULL

ELSE 0

END AS percentage_change,

-- Growth classification

CASE

WHEN COALESCE(p.cost, 0) = 0 AND COALESCE(c.cost, 0) > 0

THEN 'New Resource'

WHEN COALESCE(c.cost, 0) - COALESCE(p.cost, 0) > 0

THEN 'Increasing'

WHEN COALESCE(c.cost, 0) - COALESCE(p.cost, 0) < 0

THEN 'Decreasing'

ELSE 'Stable'

END AS cost_trend

FROM resource_details r

LEFT JOIN current_week_costs c ON r.resource_id = c.resource_id

LEFT JOIN prev_week_costs p ON r.resource_id = p.resource_id

WHERE

-- Show resources with costs in either week

COALESCE(c.cost, 0) > 0 OR COALESCE(p.cost, 0) > 0

ORDER BY week_over_week_change DESC

LIMIT 20;Athena usage and cost by workgroup and operation

SELECT

CAST(line_item_usage_start_date AS DATE) AS usage_date,

line_item_operation AS operation,

line_item_resource_id AS workgroup,

SUM(line_item_usage_amount) AS data_scanned_bytes,

SUM(line_item_unblended_cost) AS cost

FROM cur_data

WHERE

line_item_product_code = 'AmazonAthena' AND

bill_billing_period_start_date = DATE '2025-05-01'

GROUP BY 1, 2, 3

ORDER BY 1 DESC, 5 DESC;List all tags with their associated cost

WITH flattened_tags AS (

SELECT

line_item_resource_id,

line_item_unblended_cost,

k AS tag_key,

resource_tags[k] AS tag_value

FROM cur_data

CROSS JOIN UNNEST(MAP_KEYS(resource_tags)) AS t(k)

WHERE

bill_billing_period_start_date = DATE '2025-05-01'

line_item_resource_id <> '' AND

resource_tags[k] <> ''

)

SELECT

tag_key,

tag_value,

COUNT(DISTINCT line_item_resource_id) AS resource_count,

SUM(line_item_unblended_cost) AS total_cost

FROM flattened_tags

GROUP BY 1, 2

ORDER BY 1, 4 DESC;Tagged vs Untagged resources and their cost

This is one of the queries we used in the Lambda, edit the tag_key_to_search and then you can see how many resources are not tagged with that particular tag and how many they are tagged properly.

WITH tag_key_to_search AS (

SELECT 'user_creator' AS key -- Change this to your desired tag key

),

resource_tagging AS (

SELECT

line_item_product_code AS service,

line_item_resource_id,

line_item_unblended_cost,

CASE

WHEN CARDINALITY(MAP_KEYS(resource_tags)) > 0 THEN 'Tagged'

ELSE 'Untagged'

END AS general_tagging_status,

CASE

WHEN resource_tags[(SELECT key FROM tag_key_to_search)] IS NOT NULL

AND resource_tags[(SELECT key FROM tag_key_to_search)] <> '' THEN 'Has Tag Key'

ELSE 'Missing Tag Key'

END AS specific_tag_status

FROM cur_data

WHERE

line_item_resource_id <> '' AND

bill_billing_period_start_date = DATE '2025-05-01' AND

line_item_line_item_type != 'Credit' AND

line_item_line_item_type != 'Refund' AND

line_item_line_item_type = 'Usage'

)

SELECT

service,

general_tagging_status,

specific_tag_status,

COUNT(DISTINCT line_item_resource_id) AS resource_count,

SUM(line_item_unblended_cost) AS total_cost

FROM resource_tagging

GROUP BY 1, 2, 3

HAVING COUNT(DISTINCT line_item_resource_id) > 0

ORDER BY 1, 2, 3;The other query used for the Lambda, listing the untagged services, is query 15 in the sample_queries.sql file.

To generate some of the queries, I’ve used an LLM, and it seems that, providing the right context (and some editing after the proposed query), you can pretty much effortlessly create queries on top of the CUR 2.0. It is much nicer than searching 300+ (legacy cur) columns and their definition to start using the data.

Disclaimer: While vibe-coding is trendy and can bootstrap your code, please do not forget to take a closer look at your query and understand that the fields being queried are correct.

In my queries, I’ve used the unblinded cost, which is the raw, undiscounted cost.

CUR 2.0 dictionary: https://docs.aws.amazon.com/cur/latest/userguide/table-dictionary-cur2.html

Here is a very nice cheat sheet to help you better understand the AWS Cost Dataset.

Conclusion

Well, that’s all, folks. I hope you found this post helpful and that it leaves you with a bit more knowledge and confidence when navigating your AWS cost reports.

It will be a shame not to mention some already existing automation/dashboard/etc, a considerable effort from the AWS team to streamline and trivialize FinOps (to an extent of course.)

Here you can find the Cloud Intelligence dashboards.

Here you can find a cost optimization workshop.

Feel free to reach out if you encounter any problems or have suggestions.

Till the next time, stay safe and have fun! ❤️